Many applications and programming languages support JSON (JavaScript Object Notation) for data transfer and storage. Once you have BigQuery data in JSON, you can use it with any custom app or service.

This is helpful in many scenarios, such as:

- Using BigQuery data with web-based tools or dashboards

- Feeding live data into reporting and BI tools

- Building a custom data pipeline from BigQuery to other sources

- Analyzing BigQuery data outside the platform

and more…

In this article, you’ll find all the ways to connect BigQuery to JSON. This includes a no-code option to automate the integration.

Methods to connect BigQuery to JSON

There are three ways to get BigQuery data in JSON format. Choose the one that suits your use case:

- Coupler.io – This is a no-code data integration and automation platform that connects over 60 data sources (like BigQuery) to JSON, spreadsheets, BI tools, and other destinations. You can set up a live integration to access JSON data via URL, which updates automatically on a fixed schedule. The exported data follows a structured schema compatible with various applications. The tool allows you to automate exports of queries from BigQuery to JSON with just a few clicks, all without requiring any coding skills.

- Manual data export – Use native options in the BigQuery interface to export query results to JSON. This method is suitable for ad-hoc requirements where you don’t want the data to update automatically.

- BigQuery API – You can write a custom script using the BigQuery API to retrieve data in JSON format. Furthermore, this data flow can be automated via cron jobs on the server. However, this advanced method requires coding knowledge, making it unsuitable for business users.

Now, let’s explore each of these methods for BigQuery data export in detail, starting with the one that lets you automate the data flow without coding.

How to automate data exports from BigQuery using Coupler.io

Step 1: Connect to BigQuery account

To get started, click Proceed in the form below. It will take you to a pre-configured importer for BigQuery to JSON.

Log in to Coupler.io or create a new account (no credit card is required).

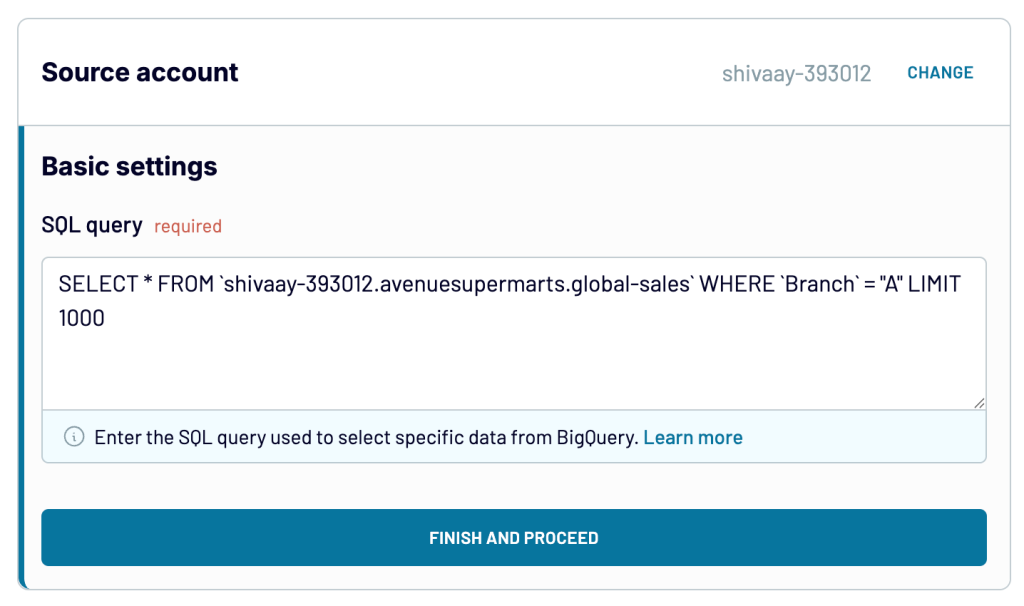

In the Source account, connect your BigQuery account using a Google Cloud key file. Read the instructions to get the key file.

Enter the SQL query for the required data and proceed.

Step 2: Transform data

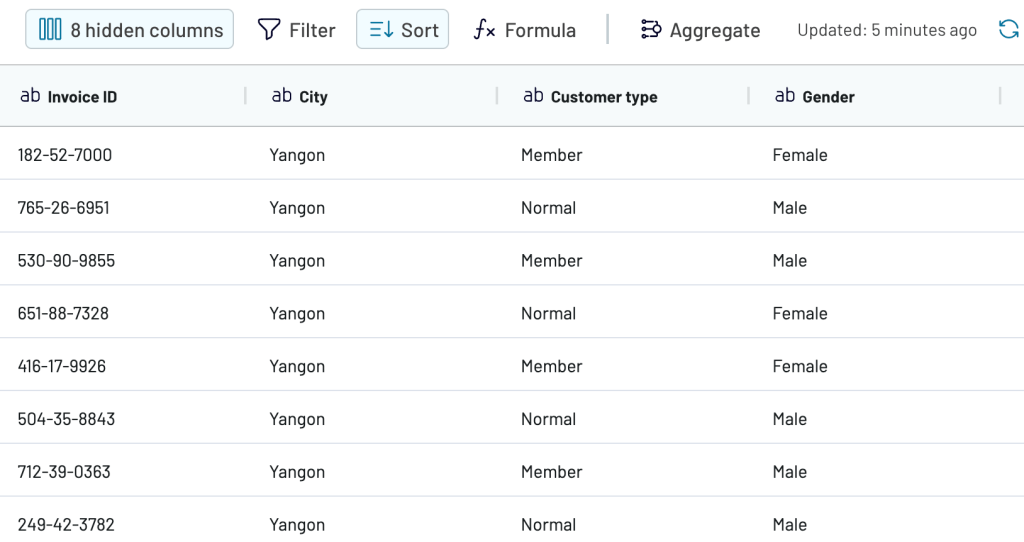

Before loading the data into JSON, preview it and make modifications if needed.

Here, you can:

- Rename or rearrange columns

- Change column datatypes

- Hide unnecessary columns

- Sort and filter data

- Add new calculated columns

It’s also possible to merge data from multiple BigQuery accounts or other data sources.

Once the data looks good, proceed to the final step.

Step 3: Get a live integration URL

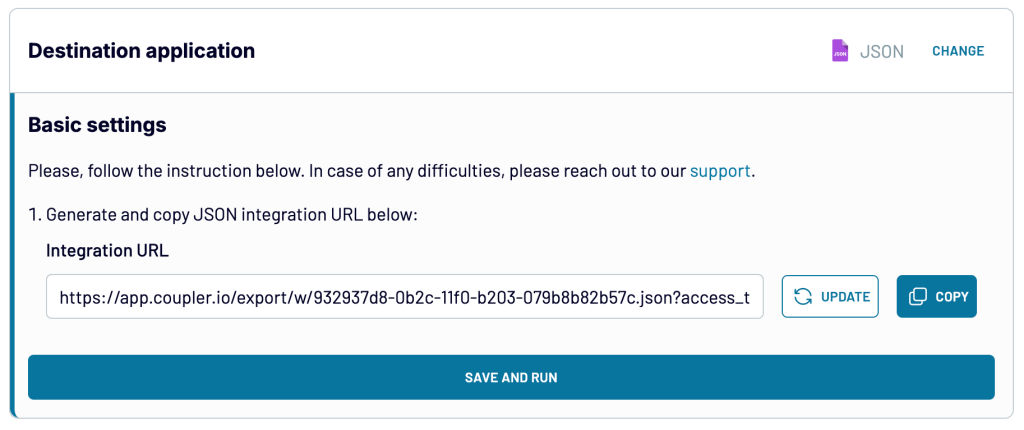

Generate an integration URL, which is essentially an online published JSON file containing your data exported from BigQuery.

Now, save and run the data flow.

On a successful run, the JSON data will be available at the integration URL. If you open it in the browser, a JSON file with your data will be downloaded to your computer.

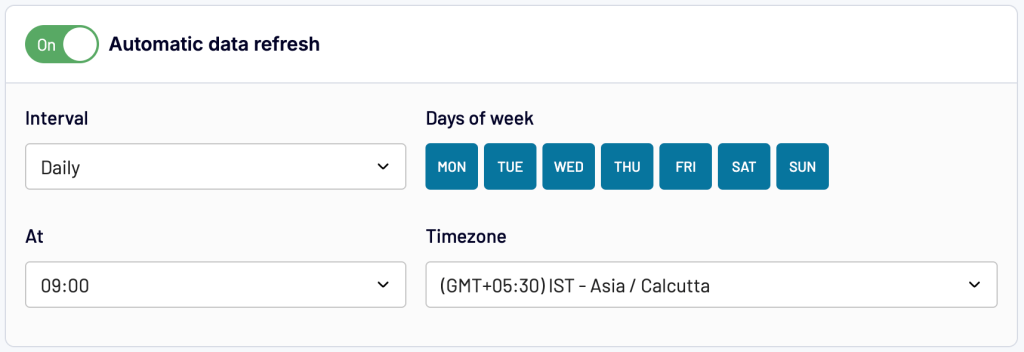

You can also schedule automatic data refresh from BigQuery to JSON in the Flow Settings. This ensures that your JSON file with BigQuery data is always up to date.

This gives you a BigQuery to JSON integration via a URL that updates on its own. The URL also acts like a REST API endpoint that returns JSON, which is easier to integrate with custom applications than using the BigQuery API directly.
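As a rough illustration of how a custom application might consume the integration URL, here is a minimal Python sketch that uses only the standard library. The function name `fetch_json` and the placeholder URL in the comments are assumptions for illustration, and the exact shape of the returned JSON depends on your data flow.

```python
import json
from urllib.request import urlopen


def fetch_json(url, timeout=30.0):
    """Download the document published at `url` and parse it as JSON."""
    with urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Example usage (placeholder URL, replace it with your own integration URL):
#   data = fetch_json("https://example.com/your-integration-url")
#   print(type(data))
```

Because the URL is refreshed on a schedule, the application can simply call the function again whenever it needs the latest data.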

In addition to BigQuery, Coupler.io can pull data from over 60 popular sources into JSON and streamline data flows. The no-code interface makes it possible to set up and automate the integration in just a few minutes, saving a lot of time.

How to manually export data from BigQuery to JSON?

You can easily export BigQuery query results into JSON format. However, this method is only suitable for static data needs, where automatic updates are not required. Every time you need fresh data, you’ll have to repeat the process, which is time-consuming.

You can do this either through the BigQuery console or with the command-line tool. It is also possible to export BigQuery data to Google Cloud Storage and then download the JSON from there, but that route is unnecessarily lengthy.

Method 1: Using BigQuery interface

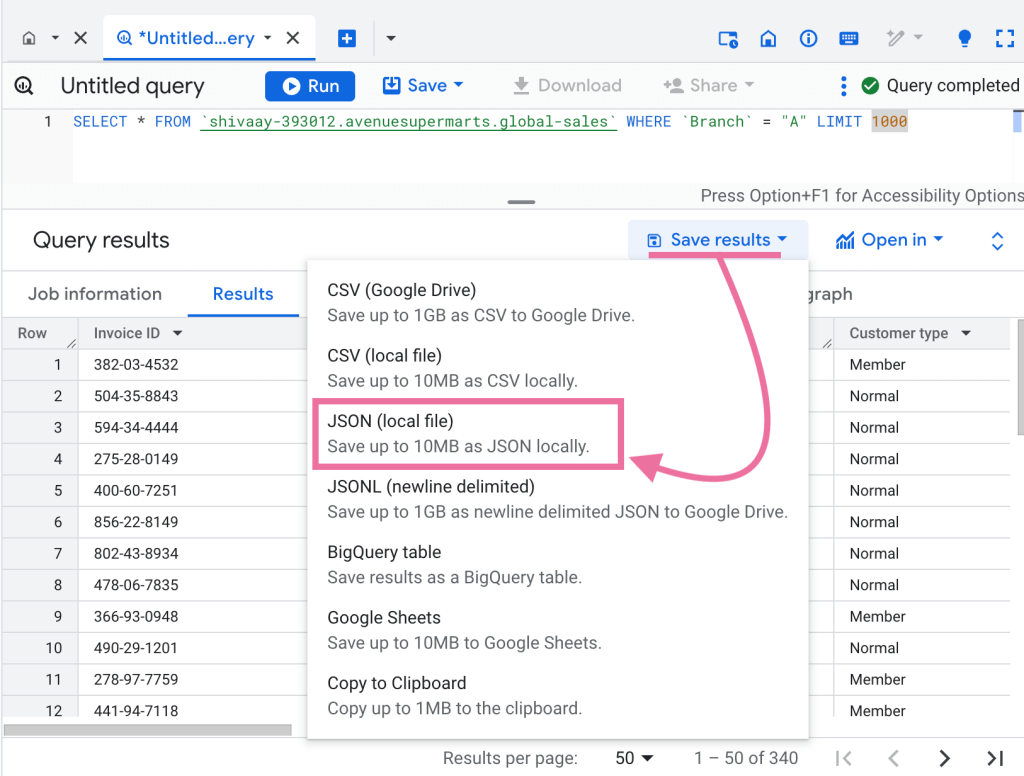

Open the BigQuery table in Google Cloud Console just like we’ve described in our BigQuery tutorial. Ensure you’re in the right project and run the desired SQL query.

Click Save results at the top of the Query results and select JSON (local file).

The JSON data file will start downloading to your local computer. This method is straightforward, but one major drawback is that you can’t export data larger than 10MB.

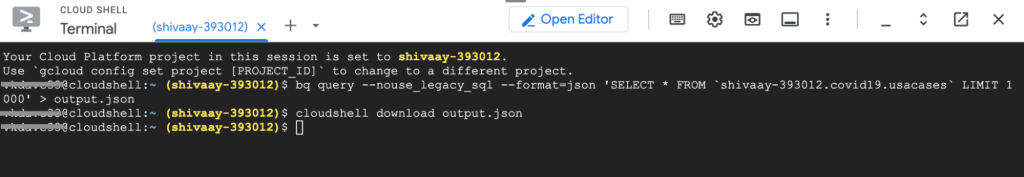

Method 2: Using Google Cloud CLI

You can use the Google Cloud Shell console to query and save results to JSON. Then, you can download the JSON file to your local computer.

In the Google Cloud console, press “G” and then “S” to activate the Cloud Shell.

Now, execute the following command:

bq query --use_legacy_sql=false --format=json "SQL-QUERY-TO-RUN" > output.json

This will run the SQL query and save the results to output.json.

To download the file, run:

cloudshell download output.json

The JSON file with your BigQuery data will start downloading. This method works well for technically advanced users (like developers) who need quick exports, but it will likely not suit business users.
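If you want to trigger the same export from a script, the command above can be wrapped in Python. This is only a sketch: it assumes the bq tool is installed and authenticated on the machine, and the function names `build_bq_command` and `export_query` are made up for illustration.

```python
import subprocess


def build_bq_command(sql):
    """Return the argument list for the bq command shown above."""
    return ["bq", "query", "--use_legacy_sql=false", "--format=json", sql]


def export_query(sql, path="output.json"):
    """Run the query with bq and redirect its JSON output to `path`."""
    with open(path, "w", encoding="utf-8") as out:
        # check=True raises CalledProcessError if bq exits with an error.
        subprocess.run(build_bq_command(sql), stdout=out, check=True)


# Example usage (requires the bq tool):
#   export_query("SELECT 1 AS n")
```

Passing the query as a separate list element avoids shell quoting problems with queries that contain quotes or backticks.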

Use BigQuery API to get data in JSON format

The BigQuery API allows developers and data engineers to query and access data in JSON format. It offers a REST API to integrate with your application and client libraries for Python, Ruby, PHP, Java, and other languages.
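If you call the REST API directly instead of using a client library, query results do not arrive as ready-made records: column names are listed under `schema.fields`, and each row is a list of cells stored under `f` and `v`. The sketch below turns such a response into a list of dictionaries. The helper name `rest_rows_to_dicts` and the sample values are assumptions for illustration, and nested or repeated fields are not handled.

```python
def rest_rows_to_dicts(response):
    """Pair each cell value with its column name from the response schema."""
    names = [field["name"] for field in response["schema"]["fields"]]
    return [
        {name: cell["v"] for name, cell in zip(names, row["f"])}
        for row in response.get("rows", [])
    ]


# A hand-written sample in the shape of a REST query response.
sample = {
    "schema": {"fields": [{"name": "Branch", "type": "STRING"},
                          {"name": "Total", "type": "FLOAT"}]},
    "rows": [{"f": [{"v": "A"}, {"v": "548.97"}]}],
}
records = rest_rows_to_dicts(sample)
```

Note that the REST response carries cell values as strings, so numeric columns need to be converted using the types in the schema. The client libraries do this conversion for you.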

To connect BigQuery to JSON using the API, follow these steps:

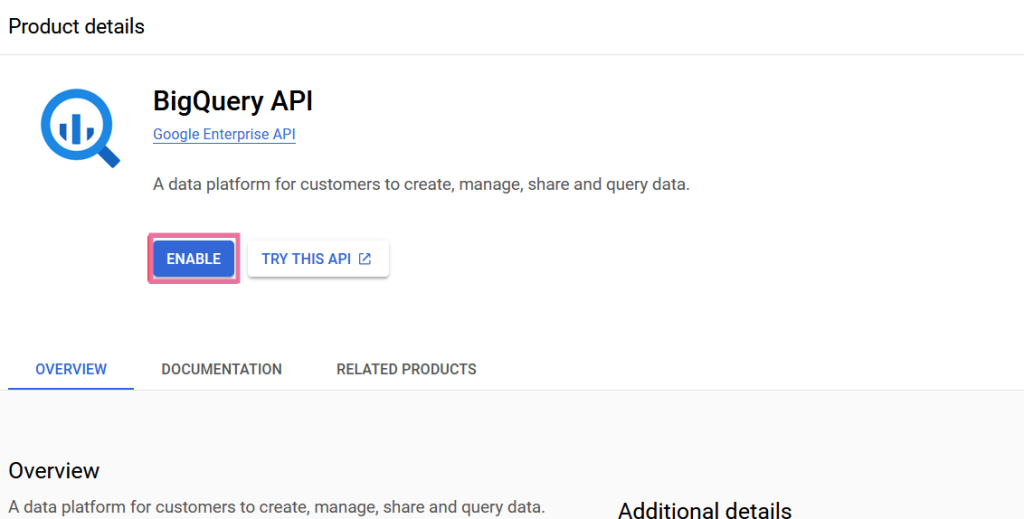

- Enable BigQuery API – In the Google Cloud Console, open the left menu and go to APIs & Services > Library. Search for “BigQuery API” and enable it.

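- Create a service account – To use the API, you need a service account. Go to IAM & Admin > Service Accounts and create a new account with the BigQuery Data Viewer role for the project. To run queries, the account also needs permission to create query jobs, which the BigQuery Job User role provides.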

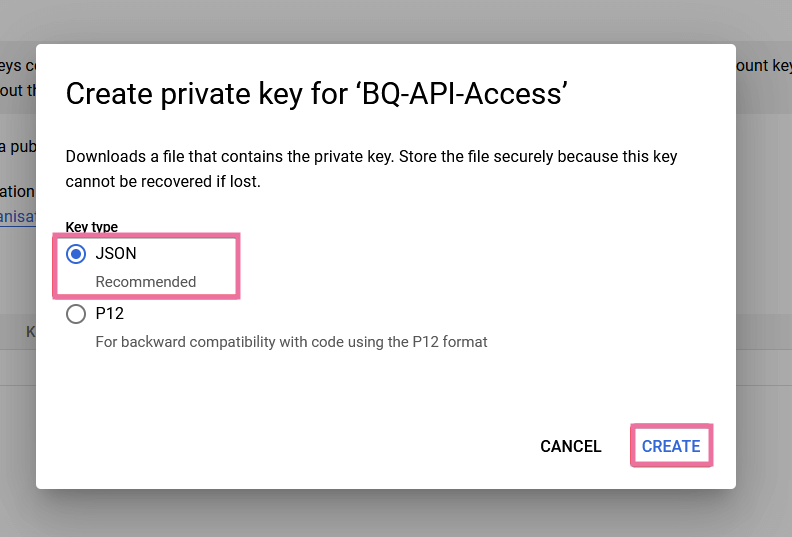

- Generate a JSON key – In the service account, open the Keys tab and create a new JSON key to use for API authentication. This will download the JSON key file.
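Before wiring the key into a program, it can help to confirm that the downloaded file is really a service account key. The sketch below checks for a few fields that such key files contain. The helper names `key_file_problems` and `load_key` are hypothetical, and the check does not verify that the key is valid or still active.

```python
import json

# Fields expected in a downloaded service account key file.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def key_file_problems(key):
    """Return a list of human-readable problems found in a parsed key file."""
    problems = [
        f"missing field: {name}" for name in REQUIRED_FIELDS if not key.get(name)
    ]
    if key.get("type") not in (None, "service_account"):
        problems.append(f"unexpected type: {key['type']}")
    return problems


def load_key(path):
    """Read and parse the JSON key file at `path`."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


# Example usage:
#   print(key_file_problems(load_key("path/to/your-service-account-key.json")))
```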

Now, you can write a custom program to integrate BigQuery to JSON via API.

For instance, if you’re using Python, run the following command:

pip3 install --upgrade google-cloud-bigquery

Then, use the BigQuery library in your script to retrieve data as JSON. For example, here’s a script I used that you can take as a reference. If you use it, make sure to replace the key path and the SQL query with your own. The query must be written in standard SQL.

from datetime import date, datetime
import json
import os

from google.cloud import bigquery

# Point the client library at your service account key file.
os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = "path/to/your-service-account-key.json"

client = bigquery.Client()

# Replace this with your own standard SQL query.
query = """
SELECT * FROM `shivaay-393012.avenuesupermarts.global-sales`
WHERE Branch = 'A'
LIMIT 1000
"""

# Run the query and wait for it to finish.
query_job = client.query(query)
results = query_job.result()


def custom_serializer(obj):
    """Convert date and datetime values to ISO 8601 strings."""
    if isinstance(obj, (date, datetime)):
        return obj.isoformat()
    raise TypeError(f"Type {type(obj)} not serializable")


# Each result row converts to a dict keyed by column name.
rows = [dict(row) for row in results]

# Write all rows to a local file as a JSON array.
with open("output.json", "w") as f:
    json.dump(rows, f, indent=4, default=custom_serializer)

This script writes the BigQuery results to a local JSON file named output.json. If the data contains nested or repeated fields (structs and arrays), or column types that the serializer does not cover, modify the script to handle them.
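One possible way to extend the serializer is sketched below. It assumes the Python client returns structs as dictionaries, repeated fields as lists, NUMERIC values as `Decimal`, BYTES as `bytes`, and TIME as `datetime.time`. The names `bigquery_default` and `rows_to_json` are made up for illustration, and encoding bytes as base64 is just one reasonable choice.

```python
import base64
import json
from datetime import date, datetime, time
from decimal import Decimal


def bigquery_default(obj):
    """Fallback used by json.dumps for values it cannot serialize itself."""
    if isinstance(obj, (date, datetime, time)):
        return obj.isoformat()
    if isinstance(obj, Decimal):
        return str(obj)  # keep full precision instead of rounding to float
    if isinstance(obj, bytes):
        return base64.b64encode(obj).decode("ascii")
    raise TypeError(f"Type {type(obj)} not serializable")


def rows_to_json(rows):
    """Serialize an iterable of row mappings to a JSON array string."""
    return json.dumps([dict(row) for row in rows], indent=4,
                      default=bigquery_default)
```

Nested dictionaries and lists need no special handling here, because `json.dumps` walks them itself and calls the fallback function for unsupported values at any depth.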

You can deploy the program on a server and set up cron jobs to build an automated data pipeline to connect BigQuery to JSON. However, this is not usually feasible because of the associated coding complexities, hosting costs, and technical burden.
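One detail to consider for a scheduled job: if another application reads output.json while the script is rewriting it, it may see a half-written file. A common precaution, sketched below, is to write to a temporary file in the same directory and then swap it into place. The function name `write_json_atomically` is hypothetical, and this is not part of the script above.

```python
import json
import os
import tempfile


def write_json_atomically(rows, path):
    """Write `rows` as JSON to `path` without exposing a partial file."""
    directory = os.path.dirname(os.path.abspath(path))
    # The temporary file must be on the same filesystem as the target.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as f:
            json.dump(rows, f, indent=4)
        os.replace(tmp_path, path)  # swaps the finished file into place
    except BaseException:
        os.unlink(tmp_path)  # clean up the temporary file on failure
        raise
```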

What’s the best option to integrate BigQuery to JSON?

If you want to use BigQuery data with a custom program, you may need a live integration. The manual export method is suitable only when you want to work with static data that does not change.

Coupler.io offers the easiest option for integrating BigQuery to JSON. Its no-code interface lets you set up the importer and automate the integration in less than five minutes. The platform also supports no-code integration with over 60 other data sources, in case you need to bring in data from elsewhere.

Start automating data integrations without coding with a Coupler.io free trial account – no credit card required.
