Copying data in spreadsheets is a piece of cake – you can either copy and paste a cell range or make a copy of a sheet. In SQL-based data warehouses, which BigQuery is, the process of copying tables is different, but not complex. The major difference is that you can copy a BigQuery table in different ways depending on your purpose for this action. In this tutorial, I’ve collected all of the options you can rely on and explained their details so you can choose the best one for your project. Let’s get started!

Or you can watch our video explaining how you can copy BigQuery tables using Coupler.io right away.

What are the options in BigQuery to copy a table?

- BigQuery console – the most primitive way to manually copy a BigQuery table with a few button clicks. Using this option, you can copy BigQuery tables to another dataset and project.

- SQL query – an alternative way to copy BigQuery tables using SQL queries.

- Coupler.io – the most actionable option to copy a BigQuery table with the help of an ETL connector, Coupler.io. It allows you to not only copy tables in BigQuery, but also automate dataflow between them, transform and consolidate data, and other things valuable for reporting and analytics.

- CLI tools – a manual way to copy BigQuery tables using the BigQuery command-line tool.

- BigQuery API – a programmatic way to copy tables in BigQuery via sending POST requests to the BigQuery API. This is the most advanced option since it requires you to have coding skills.

Definitely, each option is a go for a specific case. If you need to copy data from one table to another in BigQuery one time, the Console or SQL option will do. For recurring activity, let’s say to automate data flow from one table to another on a daily basis, Coupler.io is the ideal solution. I’ll guide you through each way to see what they look like in practice. This will allow you to better understand how they work and which one you’d like to employ for your needs. Let’s start with the most actionable option.

Option #1 – Copy a table in BigQuery using ETL by Coupler.io

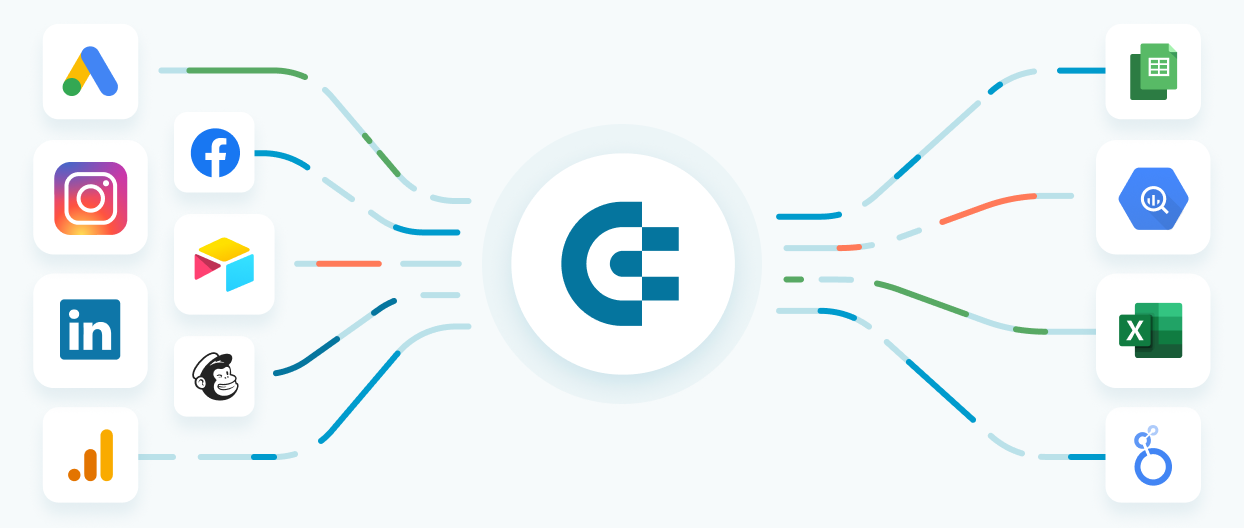

With Coupler.io, a reporting automation solution, you can not only copy tables in BigQuery but also connect them. It provides an ETL solution to automate exports of data from multiple sources into:

- Spreadsheets (Google Sheets, Microsoft Excel)

- Data warehouses (Google BigQuery)

- BI tools (Looker Studio, Power BI)

Using the BigQuery integration by Coupler.io, you can copy a source BigQuery table and synchronize it with the created copy.

Sign up to Coupler.io, click Add new importer, and select the source app and destination apps. In our case, BigQuery works for both apps.

After that, complete 4 simple steps as follows:

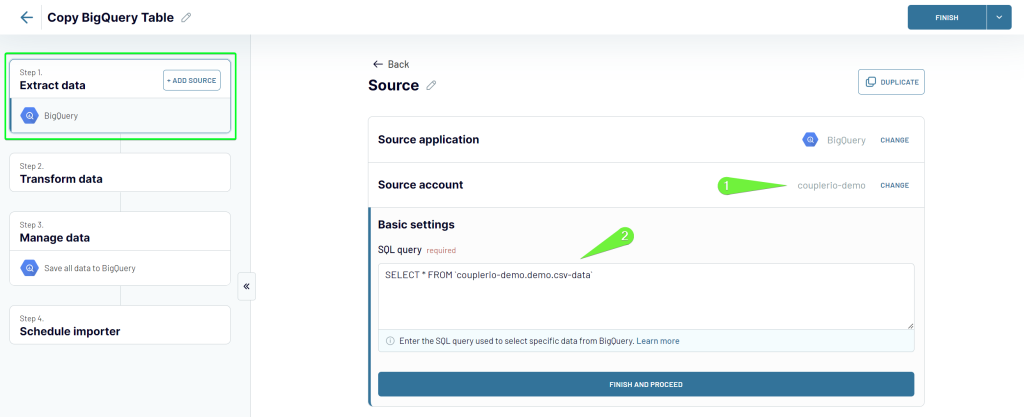

Step 1. Extract data from a source BigQuery table

- Connect to a BigQuery account to access a BigQuery project where your source table is located. For this, you’ll need to select a Google Cloud key file stored on your device and click Save.

Here are detailed instructions on how to get a Google Cloud key file.

- Enter the SQL query to copy an entire table. Use the following syntax

SELECT * FROM `{project}.{data-set}.{table}`

In my example, to copy a BigQuery table named csv-data located in the demo dataset of the couplerio-demo project, the SQL query will look like this

SELECT * FROM `couplerio-demo.demo.csv-data`

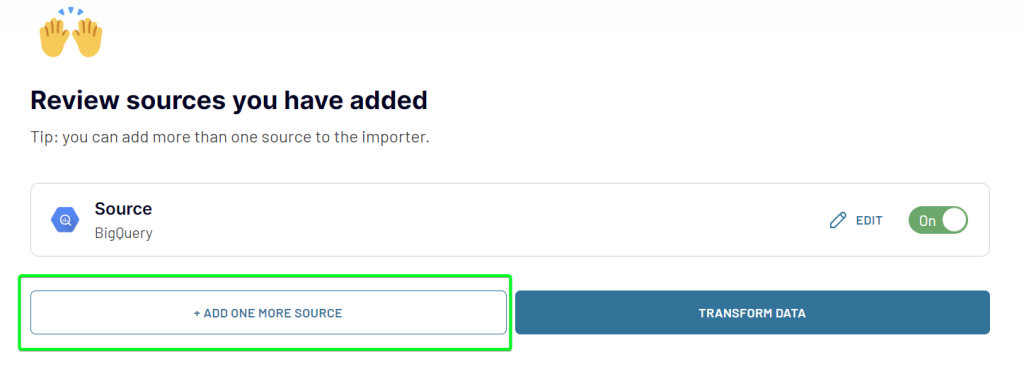

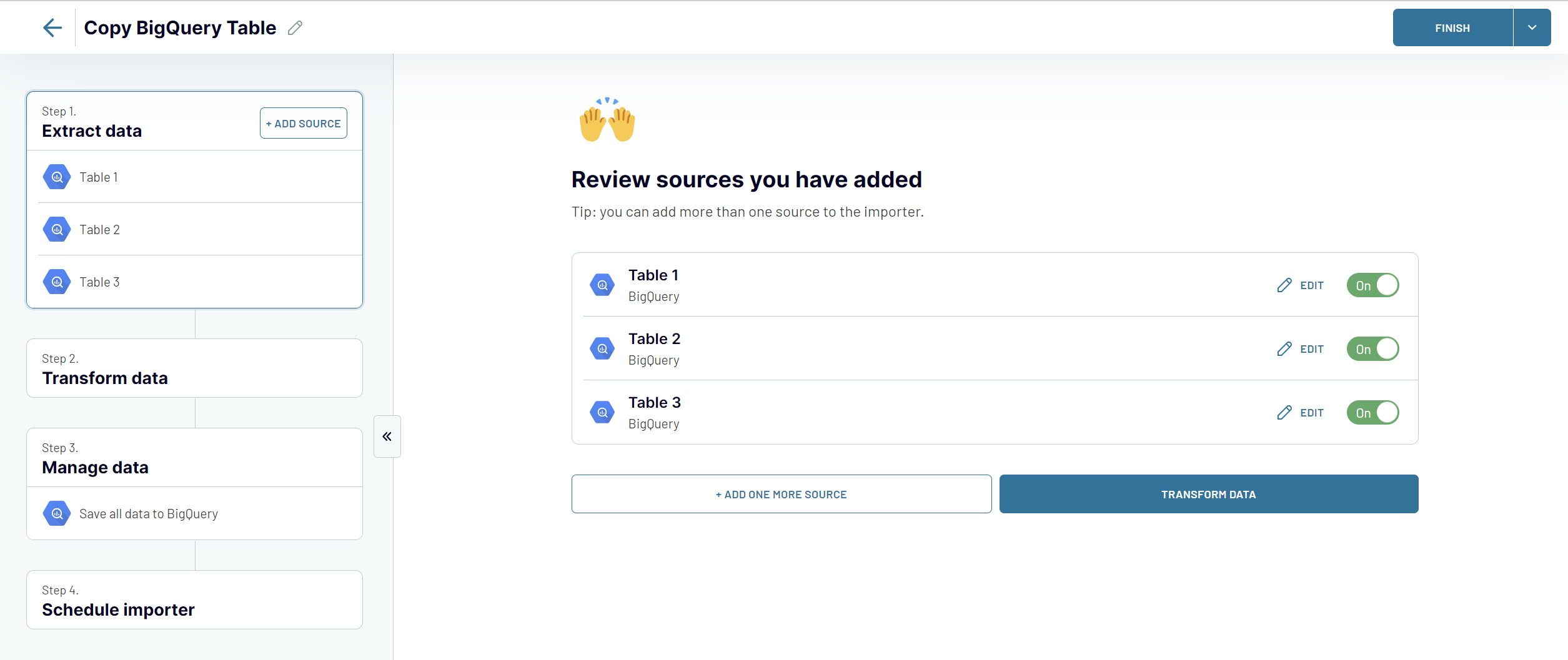

If you want to copy data from multiple tables on different BigQuery projects, Coupler.io allows you to do this easily. Click Add one more source and configure the connection.

I’m not going to copy multiple tables in this example, so I just click Transform data.

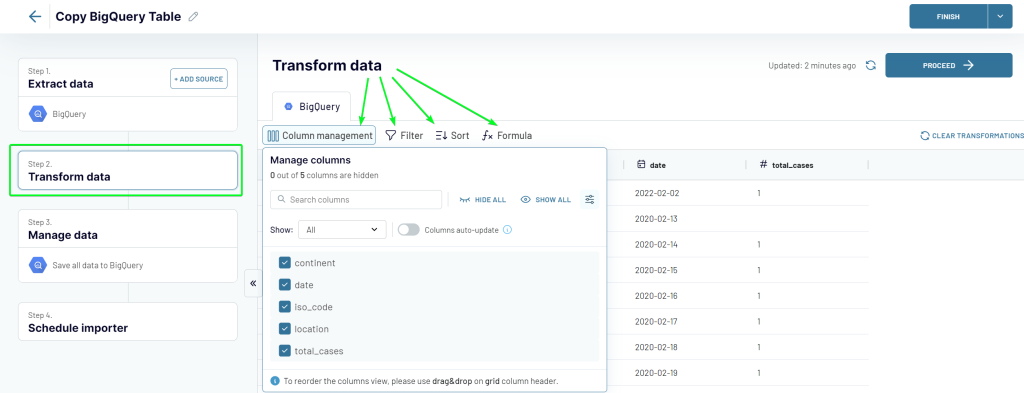

Step 2. Transform data

This brings me to the step where I can preview the data to copy and even transform it. Coupler.io supports the following transformations that you can do in UI:

- Column management (hide/unhide, rename, edit columns)

- Data filtering and sorting

- Formula (adding new columns using supported formulas)

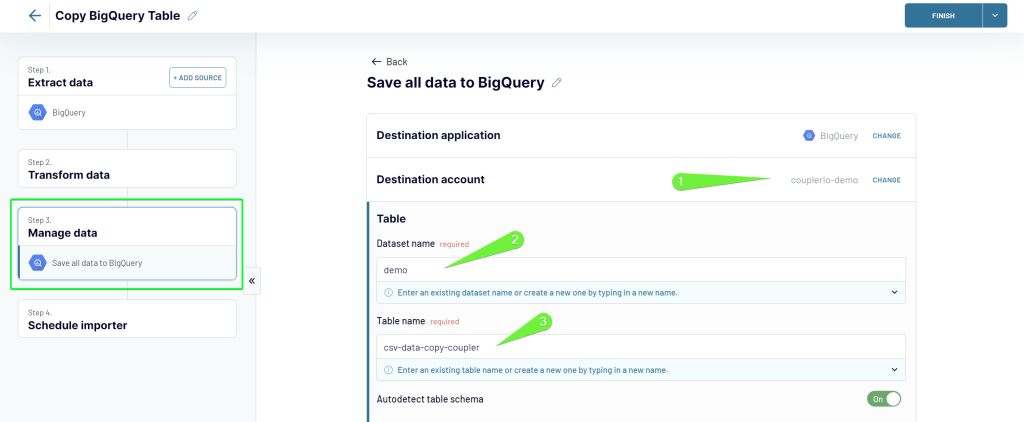

Step 3. Manage data to load to the destination table

- If you’re making a BigQuery table copy within the same project, select the project from the drop-down list. If you’re going to copy the table to another project, you’ll need to connect it using Google Cloud key file, as I did when configuring the source.

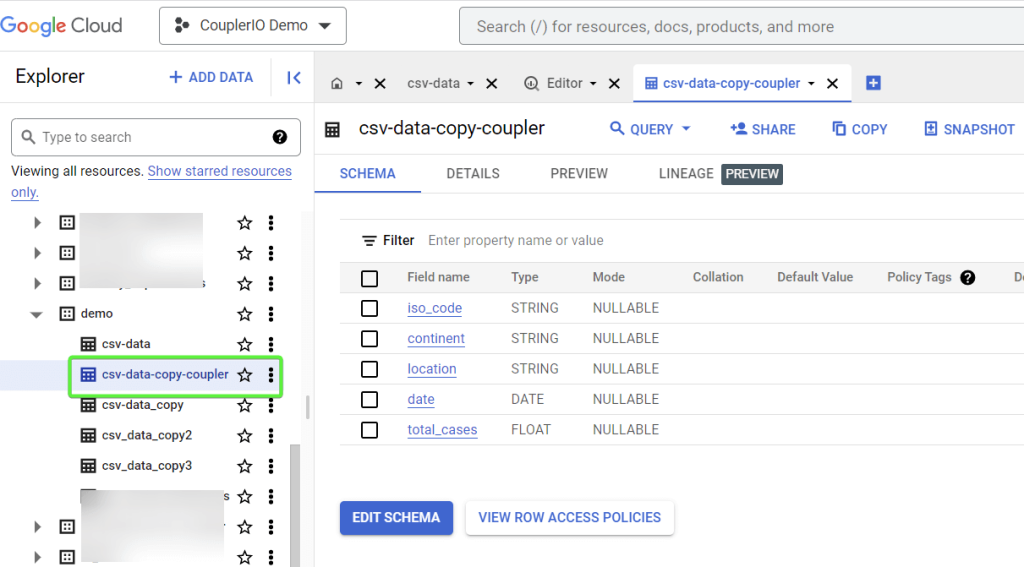

- Then you’ll need to either specify an existing dataset and a table or create new ones by typing in the new names. I want to use the same demo dataset and create a new table named csv-data-copy-coupler.

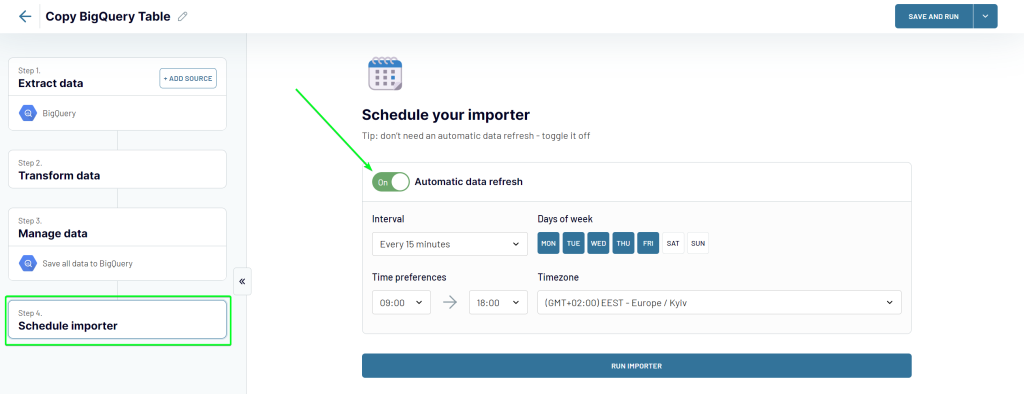

The only thing left to make a BigQuery table copy is to click Run importer. But you can also automate the data flow from one table to another. For this, you only need to enable the Automatic data refresh and configure the desired schedule. With Coupler.io, you can have your data refreshed as frequently as every 15 minutes!

You can do this both during the importer’s initial setup and after the import has already been run.

Once the import is complete, you can click the View Results button to open the copied table. And here is what it looks like in BigQuery.

Can I copy multiple BigQuery tables into one?

I mentioned above that Coupler.io allows you to add multiple data sources to your importer. The idea is that you can copy data from multiple tables within one BigQuery project/dataset or from different ones, and consolidate this data into one destination table. For this, you need to click the Add one more source button after you’ve configured the source table to copy. Here is what it might look like:

You can add as many source tables as you want but all of them will be merged into one destination table. If you need to make copies of multiple tables without merging them, you’ll have to create one importer per each table separately.

Option #2 – Copy a BigQuery table to another table using the console

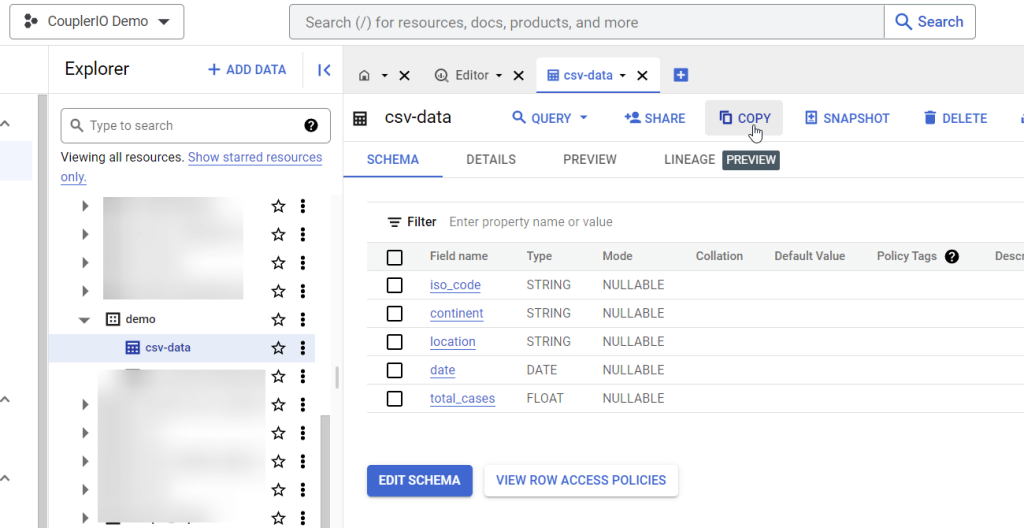

The most primitive and straightforward way to copy a BigQuery table is via the console (BigQuery UI).

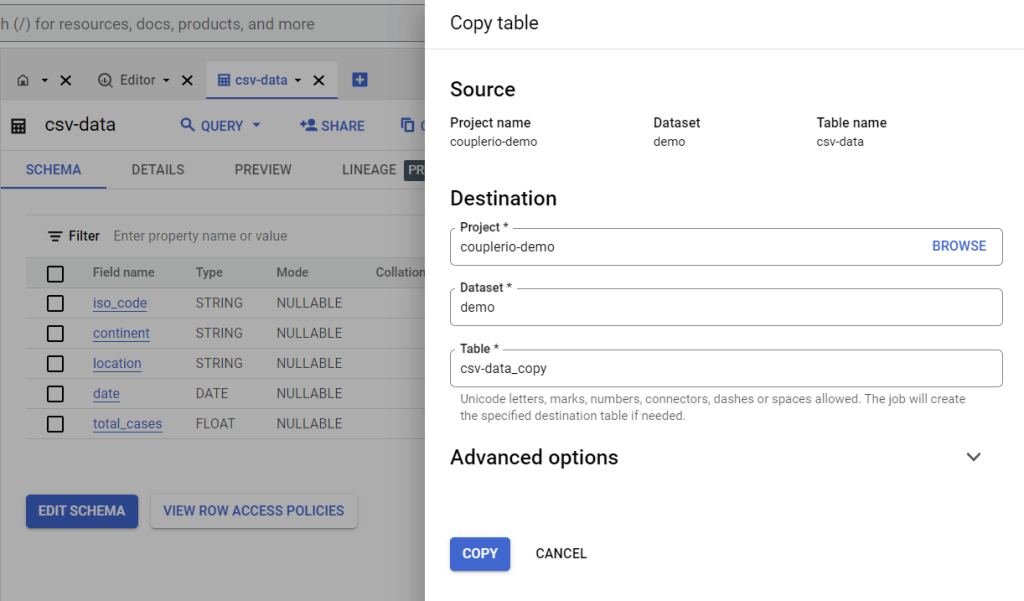

I have a BigQuery table named csv-data located in the demo dataset of the couplerio-demo project. To simply create a copy of this BigQuery table, click the Copy button on the console.

Then you need to specify the destination for your copy namely:

- Project

- Dataset

- Table (the name for the copied table)

Click Copy to put this in action.

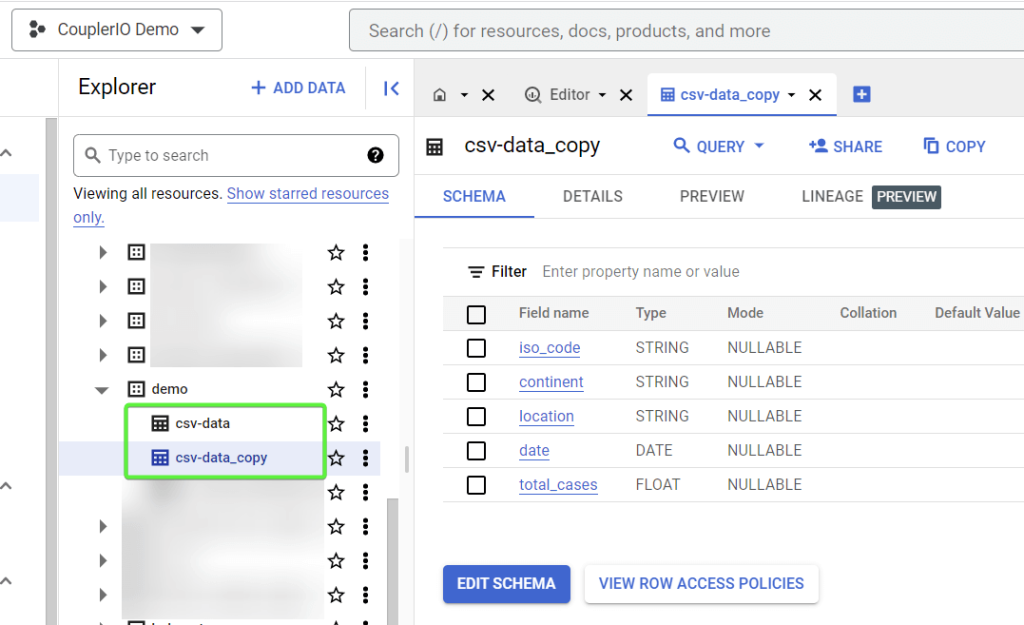

There you go! Here is what the copied table looks like.

Option #3 – Copy a table in BigQuery using SQL query

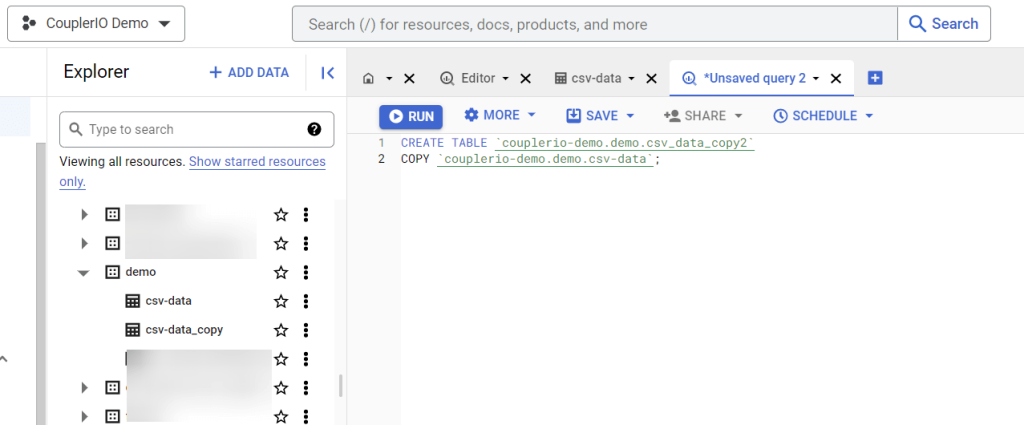

This method allows you to use the power of SQL to create a new table and copy data from another table into it. Here is what the syntax for such a query looks like:

CREATE TABLE `project-name.dataset-name.new-table-name` COPY `project-name.dataset-name.table-name`;

CREATE TABLEproject-name– specify the name of the project where to create a new tabledataset-name– specify the name of the dataset where to create a new tablenew-table-name– specify the name for the new table

COPYproject-name– specify the name of the project where the table to be copied is locateddataset-name– specify the name of the dataset where the table to be copied is locatedtable-name– specify the name of the table to be copied

Here is an example of what it may look like in practice:

CREATE TABLE `couplerio-demo.demo.csv_data_copy2` COPY `couplerio-demo.demo.csv-data`;

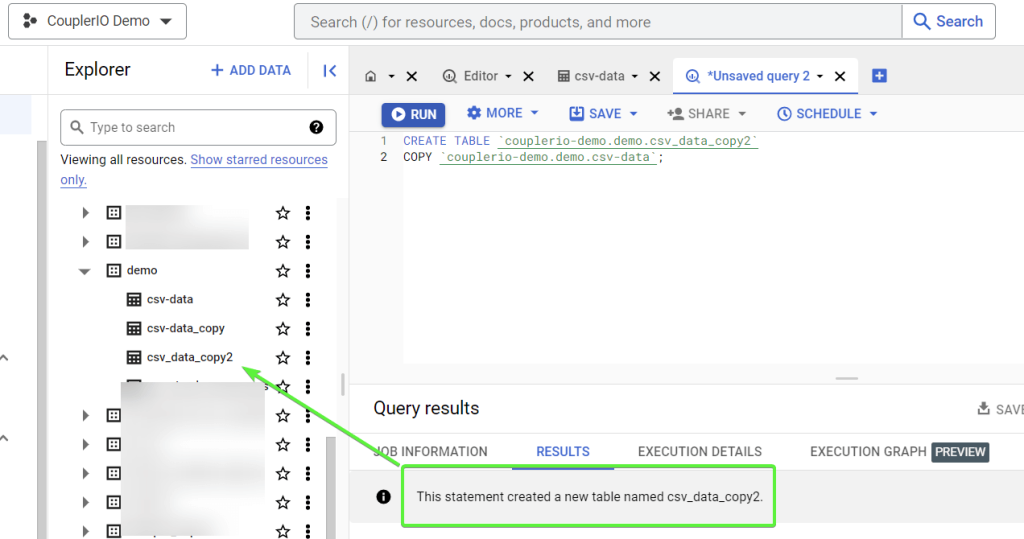

Click Run to run your SQL query and enjoy the result.

I’ve already introduced three ways to copy a BigQuery table to another table. Before we proceed to the other methods, let’s answer two questions that you may have after reading this.

How to copy a BigQuery table to another dataset

All the introduced options allow you to copy BigQuery table to another dataset.

- BigQuery console: you need to specify the desired dataset in the Destination section.

- SQL query: you need to specify the desired dataset when making your query.

- Coupler.io: you need to specify the desired dataset when configuring the destination table.

How to copy a BigQuery table to another project

All the introduced options allow you to copy BigQuery tables to another project if you have respective access to it.

- BigQuery console: you need to specify the desired project in the Destination section. You can click Browse to do this.

- SQL query: you need to specify the desired project when making your query.

- Coupler.io: you need to connect a Google BigQuery account with access to the associated BigQuery project using Google Cloud key file.

Now I can proceed to the other two methods you can consider to copy BigQuery tables: CLI and API.

Option #4 – Copy a BigQuery table using the command-line tool

BigQuery provides a Python-based command-line tool to manipulate data. You can enter CLI commands in two ways:

- from the Google Cloud console – for this, you need to activate Cloud Shell

- from the Google Cloud CLI – for this, you need to install and configure the Google Cloud CLI.

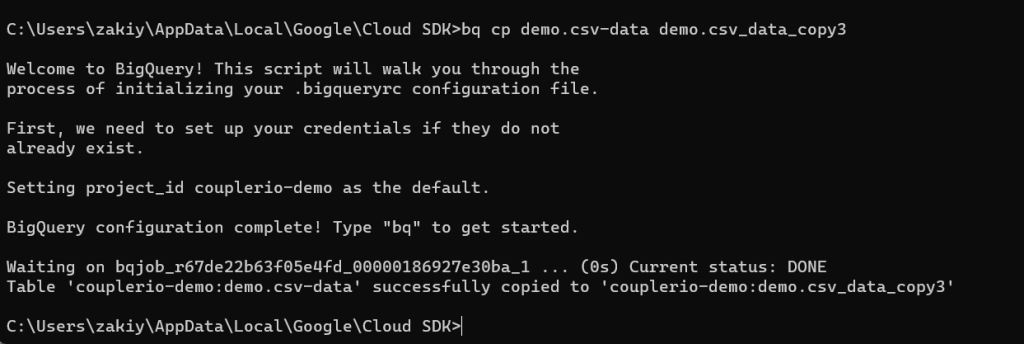

I’m going to demonstrate how you can copy a BigQuery table using the Google Cloud CLI.

Prerequisites: Make sure to install the latest version of the Google Cloud SDK and authenticate with your Google Cloud account. After the installation, you’ll need to set your default project.

Here is the syntax of a command to copy a BigQuery table:

bq cp [source-table] [destination-table]

[source-table]should be specified in the following format:dataset-name.table-name[destination-table]should be specified in the following format:dataset-name.table-name

Here is what the command looks like in my example

bq cp demo.csv-data demo.csv_data_copy3

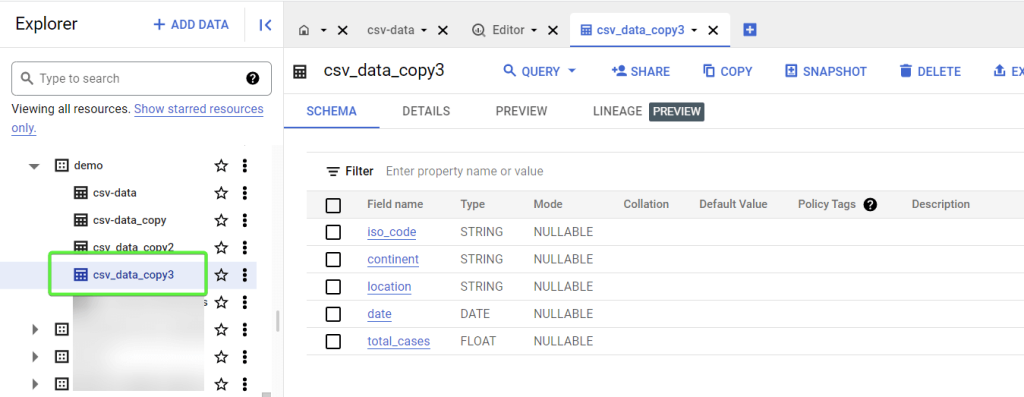

And here is the result – a copied BigQuery table.

This is a basic example. However, with CLI commands, you can specify additional options when copying tables in BigQuery. For example, here is a syntax for a command to copy a table to another project using the schema defined in the schema file

bq cp --destination_project=[destination-project] --destination_table=[destination-table] --schema=[schema-file] [source-table]

[destination-project]– specify the name of the BigQuery project where you want to copy a table[destination-table]should be specified in the following format:dataset-name.table-name[schema-file]– specify a .json file with a table schema[source-table]should be specified in the following format:dataset-name.table-name

Option #5 – Copy a table using the BigQuery API

Eventually, the most thorny way to copy a table in BigQuery – the API. To use this option, you’ll need the following:

- A Google Cloud Platform (GCP) project with the BigQuery API enabled.

- A service or user account with permission to read the source table and write to the destination table.

- The BigQuery API client library for your programming language, for example, Python, installed in your development environment.

Is everything checked? Then buckle up ?

Example of how to copy a BigQuery table to a dataset with Python

Let’s check out an example of using Python to copy a BigQuery table via the BigQuery API.

- First, authenticate with your Google Cloud account and create a new BigQuery client object. Check out the BigQuery API documentation where you will find the respective authentication and client creation instructions.

- Define the ‘CopyJob‘ configuration object with the following properties:

source_table: in the following format:project-name:dataset-name.table-namedestination_table: in the following format:project-name:dataset-name.table-name

job_config: define any additional configuration options, such as the schema or write disposition.

Here is an example of how this may look in Python:

from google.cloud import bigquery client = bigquery.Client() job_config = bigquery.CopyJobConfig() job_config.write_disposition = bigquery.WriteDisposition.WRITE_TRUNCATE source_table = "my-project:my-dataset.my-table" destination_table = "my-project:my-dataset.my-table-copy" copy_job = client.copy_table(source_table, destination_table, job_config=job_config)

The next step is to submit the ‘CopyJob‘ to BigQuery by calling the result() method on the ‘CopyJob‘ object. This will execute the copy job asynchronously and return a ‘Table‘ object representing the copied table.

copied_table = copy_job.result()

That’s it. Oh, if you want to monitor the progress of the ‘CopyJob‘, you can optionally use the ‘CopyJob‘ object’s state property and the ‘job_id‘ property to periodically check the status using the client.get_job() method. Here is what it may look like:

while copy_job.state != "DONE":

print(f"Job {copy_job.job_id} is {copy_job.state.lower()}...")

time.sleep(1)

if copy_job.error_result:

print(f"Job {copy_job.job_id} failed: {copy_job.error_result['message']}")

else:

print(f"Table {destination_table} was successfully copied!")

The status of the job will be printed every second until it is done or failed.

FAQ on BigQuery copy table

The following questions and answers to them should be quite useful since they provide you with a full picture of how you can copy tables in BigQuery.

Can I copy a BigQuery table without data?

There is only one reason why anyone would need to copy a table without data in BigQuery – they need to copy its schema to reuse it. All the options I introduced in the article will let you make a copy of a BigQuery table, which contains no data.

The more interesting case is when you need to only copy the schema of a non-empty BigQuery table but without this data.

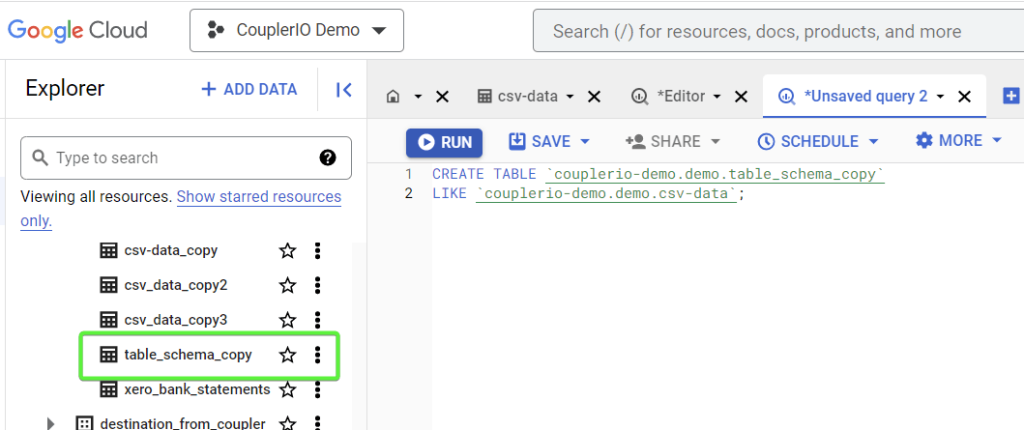

Copy the BigQuery table schema

In BigQuery, you can benefit from the CREATE TABLE LIKE statement to only copy the schema of a source table. Here is the syntax you should use for your SQL query:

CREATE TABLE `project-name.dataset-name.new-table-name` LIKE `project-name.dataset-name.table-name`;

CREATE TABLEproject-name– specify the name of the project where to create a new tabledataset-name– specify the name of the dataset where to create a new tablenew-table-name– specify the name for the new table

LIKEproject-name– specify the name of the project where the table to be copied is locateddataset-name– specify the name of the dataset where the table to be copied is locatedtable-name– specify the name of the table to be copied

Here is what the SQL query example may look like:

CREATE TABLE `couplerio-demo.demo.table_schema_copy` LIKE `couplerio-demo.demo.csv-data`;

When you run it, you’ll get a copy of the specified tables with no data in it.

Limitations on BigQuery tables copy

If you ask chatGPT on limitations associated with BigQuery table copy, it would likely reply something like:

It’s a good idea to review the BigQuery documentation and check any relevant quotas and permissions before attempting to copy a table in BigQuery.

And it’s true. So I’ve done this and here are the core limitations/requirements you should be aware of:

- Permissions: To copy a BigQuery table, you need two permissions:

- to read the source table

- to write to the destination table

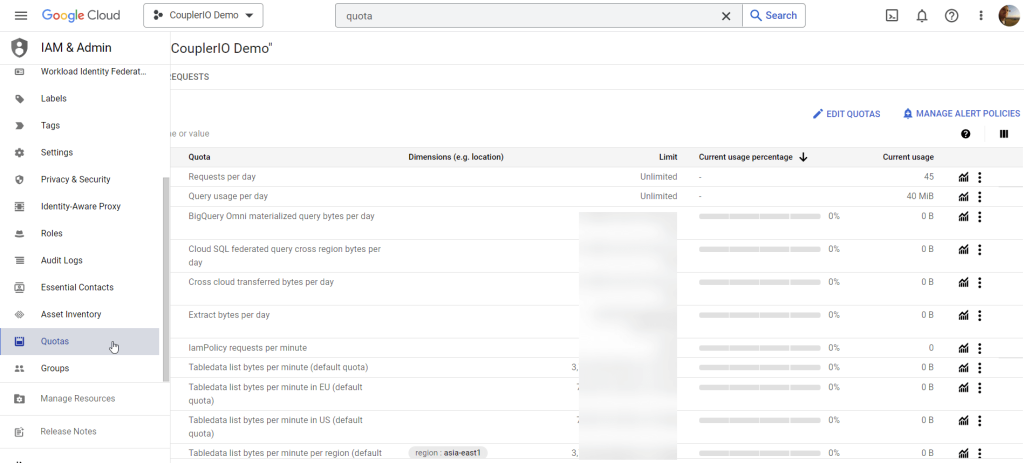

- Quotas: Your BigQuery account has quotas on the number of table copy operations. Those quotas can be defined per day, per project, and per destination dataset. You can check out your current quotas in IAM & Admin of the GCP Console.

- Limitations of specific copy methods: These limitations are associated with the method you use to copy a BigQuery table.

Which method to copy BigQuery tables is the best?

To put it simply, I believe that Coupler.io is the best method to copy your BigQuery table ?

But, I can’t be biased, therefore, I would say that the best method should be defined according to your requirements and needs. So, if you need to make a table copy just once, then there is no sense in signing up for Coupler.io. A few button clicks on the BigQuery console will do the job quickly and efficiently.

However, if you deal with this activity on a recurring basis, then it makes sense to opt for the SQL option. Coupler.io will be the best if you want to automate table copying for reporting or analytics.

Choose the best method for you and do this wisely. Good luck!

Streamline your data analytics & reporting with Coupler.io!

Coupler.io is an all-in-one data analytics and automation platform designed to close the gap between getting data and using its full potential. Gather, transform, understand, and act on data to make better decisions and drive your business forward!

- Save hours of your time on data analytics by integrating business applications with data warehouses, data visualization tools, or spreadsheets. Enjoy 700+ available integrations!

- Preview, transform, and filter your data before sending it to the destination. Get excited about how easy data analytics can be.

- Access data that is always up to date by enabling refreshing data on a schedule as often as every 15 minutes.

- Visualize your data by loading it to BI tools or exporting it directly to Looker Studio. Making data-driven decisions has never been easier.

- Easily track and improve your business metrics by creating live dashboards on your own or with the help of our experts.

Try Coupler.io today for free and join 1M+ happy users to accelerate growth with data-driven decisions.

Start for free